Rationale

As modern astrophysical surveys deliver an unprecedented amount of data, from the imaging of hundreds of millions of distant galaxies to the mapping of cosmic radiation fields at ultra-high resolution, conventional data analysis methods are reaching their limits in both computational complexity and optimality. Deep Learning has rapidly been adopted by the astronomical community as a promising way of exploiting these forthcoming large datasets and of extracting the physical principles that underlie these complex observations. This has led to exponential growth in the number of publications: in the last year alone, about 500 astrophysics papers mentioned deep learning or neural networks in their abstracts. Yet many of these works remain exploratory and have not yet translated into real scientific breakthroughs.

The goal of this ICML 2022 workshop is to bring together Machine Learning researchers and domain experts in the field of Astrophysics to discuss the key open issues that hamper the use of Deep Learning for scientific discovery.

An important aspect of the success of Machine Learning in Astrophysics is a two-way interdisciplinary dialog in which concrete data-analysis challenges spur the development of dedicated Machine Learning tools. This workshop is designed to facilitate that dialog and will include a mix of interdisciplinary invited talks and panel discussions, giving ICML audiences an opportunity to connect their research interests to concrete, outstanding scientific challenges.

We particularly welcome contributions that target, or report on, the following open problems (the list is not exhaustive):

- Efficient high-dimensional Likelihood-based and Simulation-Based Inference

- Robustness to covariate shifts and model misspecification

- Anomaly and outlier detection; searches for rare signals with ML

- Methods for accurate uncertainty quantification

- Methods for improved interpretability of models

- (Astro)physics-informed models; models that preserve symmetries and equivariances

- Deep Learning for accelerating numerical simulations

- Benchmarking and deployment of ML models for large-scale data analysis

Contributions on these topics do not need to be Astrophysics-focused; works on relevant ML methodology, or on similar considerations in other scientific fields, are also welcome.

Program

Confirmed Invited Speakers and Panelists

Josh Bloom

UC Berkeley

Katie Bouman

Caltech

Daniela Huppenkothen

SRON

Jakob Macke

Tübingen University

Laurence Perreault-Levasseur

University of Montreal

Dustin Tran

Google

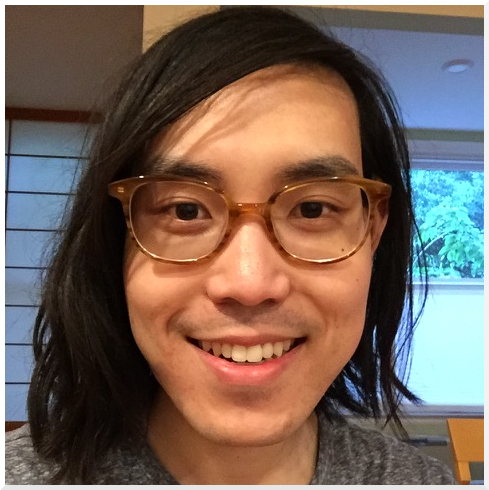

George Stein

UC Berkeley

Soledad Villar

Johns Hopkins University

Workshop Schedule

All times are in Eastern Time. Please visit the ICML Workshop Page for the live schedule (requires registration).

| Session | Details |
| --- | --- |
| Introduction | Welcome and Introduction of the Workshop |
| Keynote | Jakob Macke: Simulation-based inference and the places it takes us |
| Break | Morning Coffee Break |
| Spotlight | Aritra Ghosh: GaMPEN: An ML Framework for Estimating Galaxy Morphological Parameters and Quantifying Uncertainty |
| Spotlight | Ioana Ciuca: Unsupervised Learning for Stellar Spectra with Deep Normalizing Flows |
| Spotlight | Siddharth Mishra-Sharma: Strong Lensing Source Reconstruction Using Continuous Neural Fields |
| Keynote | Katherine Bouman: Capturing the First Portrait of Our Milky Way's Black Hole & Beyond |
| Break | Lunch Break |
| Keynote | Dustin Tran: Uncertainty Quantification in Deep Learning |
| Spotlight | Chirag Modi: Reconstructing the Universe with Variational self-Boosted Sampling |
| Spotlight | Yuchen Dang: TNT: Vision Transformer for Turbulence Simulations |
| Break | Afternoon Coffee Break |
| Keynote | Soledad Villar: Equivariant machine learning, structured like classical physics |
| Spotlight | Kwok Sun Tang: Galaxy Merger Reconstruction with Equivariant Graph Normalizing Flows |
| Spotlight | Denise Lanzieri: Hybrid Physical-Neural ODEs for Fast N-body Simulations |
| Spotlight | Tri Nguyen: Uncovering dark matter density profiles in dwarf galaxies with graph neural networks |
| Panel Discussion | Enabling Scientific Discoveries with ML |
| Poster Session | Main Poster Session |

Accepted Contributions

Call for Abstracts

We invite all contributions in connection with the theme of the workshop as described here, from the fields of Astrophysics and Machine Learning, but also from other scientific fields facing similar challenges.

Original contributions and early-stage research are encouraged. Contributions presenting results that were recently published or are currently under review are also welcome. The workshop will not have formal proceedings, but accepted submissions will be linked on the workshop webpage.

Submissions, in the form of extended abstracts, need to adhere to the ICML 2022 format (LaTeX style files), be anonymized, and be no longer than 4 pages (excluding references). After double-blind review, a limited set of submissions will be selected for contributed talks, and a wider set of submissions will be selected for poster presentations.

Please submit your anonymized extended abstract through CMT at https://cmt3.research.microsoft.com/ML4Astro2022 before May 23rd, 23:59 AOE.

Logistics and FAQs

ICML 2022 is currently planned as an in-person event. Accordingly, this workshop is planned in a hybrid format, with physical poster sessions and in-person speakers, but with support for virtual elements so that people unable to travel can participate. We encourage all interested participants, regardless of their ability to travel to ICML, to submit an extended abstract.

Registration for ICML workshops is handled through the main ICML conference registration here. The workshop will be able to guarantee ICML registration for participants with accepted contributions.

Inquiries regarding the workshop can be directed to icml2022ml4astro@gmail.com

Important Dates

- Submission deadline: May 23rd

- Author Notification: June 10th

- SlidesLive upload deadline for online talks: July 1st (SlidesLive only guarantees that your recording will be available in time for the conference if it is uploaded by this official deadline, so we strongly encourage you to meet it.)

- Camera-ready paper deadline: July 10th

- Camera-ready poster deadline: July 15th (see instructions below)

- Workshop date: July 22nd

Instructions for Posters

To prepare your poster, please use the following dimensions:

- Recommended size: 24”W x 36”H (61 x 90 cm, roughly A1 portrait)

- Maximum size: 48”W x 36”H (122 x 90 cm, roughly A0 landscape)

- Please print on lightweight paper: poster boards may not be available for workshops, but you will be able to tape your poster to the wall using the tape provided.

In addition to the physical poster, please upload the camera-ready version of your poster to the ICML website. Instructions are available at https://wiki.eventhosts.cc/en/reference/posteruploads, and you can submit your poster at https://icml.cc/PosterUpload.

SOC

Scientific Organizing Committee for the ICML 2022 Machine Learning for Astrophysics workshop:

Francois Lanusse

CNRS (Co-Chair)

Marc Huertas-Company

IAC (Co-Chair)

Vanessa Boehm

UC Berkeley

Brice Ménard

Johns Hopkins University

Xavier J. Prochaska

UC Santa Cruz

Uros Seljak

UC Berkeley

Francisco Villaescusa-Navarro

Simons Foundation